While we usually focus on e-commerce insights, in this piece we are pulling back the curtain on the technology that powers them. Haris, from our AI Engineering team, shares a few nuggets from the R&D we conduct to ensure our clients get the most accurate data possible.

Executive Summary

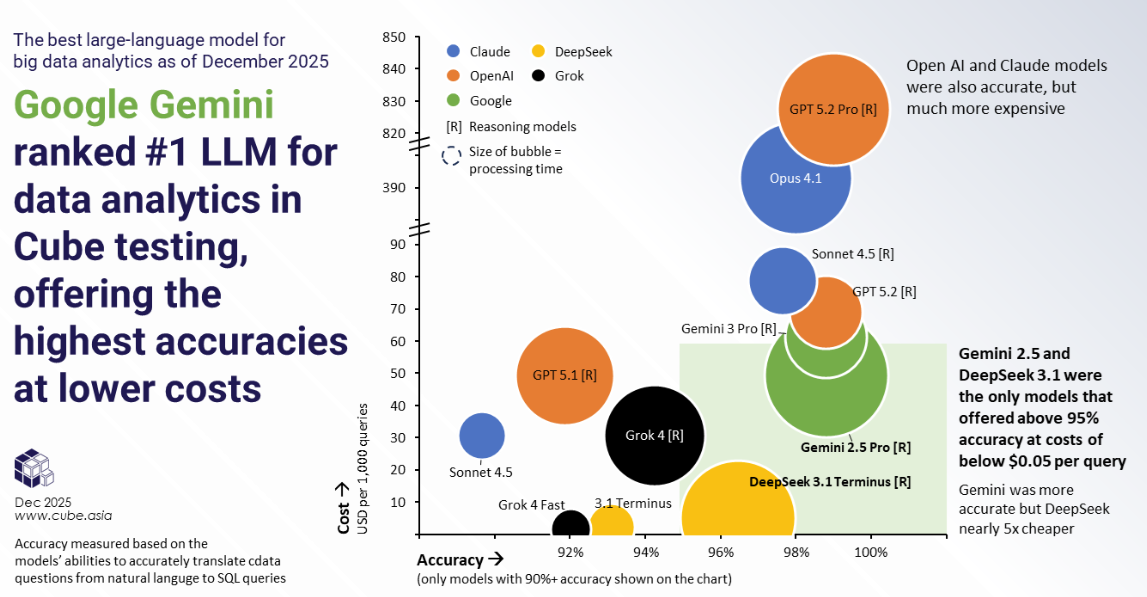

At Cube, we have been testing Large Language Models (LLMs) for nearly two years, using some of the standard analytics we do for our clients as a reference to benchmark their relative performance across three dimensions: accuracy, cost, and time. As we start a new year, we thought it would be a good idea to share the results from our latest round of testing, particularly after an exciting end to last year, when both Google and OpenAI released their latest models.

This benchmark is based on a specific type of analytics we do, where we use “SQL Agents” to turn plain English questions into precise database code (SQL). For example, a brand manager might ask, “What was the month-over-month revenue growth for our top 3 SKUs in Q4?” The AI must translate that natural language into a complex SQL query to pull the correct numbers from the database.
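To make the task concrete, here is a minimal sketch of the kind of SQL such a question might require, run against a toy in-memory SQLite table. The `sales` table, its columns, the sample figures, and the choice of 2024 as the Q4 in question are all hypothetical illustrations, not Cube's actual schema or data. It is also only one reasonable way to write the query.

```python
import sqlite3

# Hypothetical schema and data: one row per SKU per month (illustration only).
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE sales (sku TEXT, month TEXT, revenue REAL)")
conn.executemany(
    "INSERT INTO sales VALUES (?, ?, ?)",
    [
        ("A", "2024-10", 100.0), ("A", "2024-11", 120.0), ("A", "2024-12", 150.0),
        ("B", "2024-10", 200.0), ("B", "2024-11", 180.0), ("B", "2024-12", 198.0),
        ("C", "2024-10", 50.0), ("C", "2024-11", 75.0), ("C", "2024-12", 60.0),
        ("D", "2024-10", 10.0), ("D", "2024-11", 12.0), ("D", "2024-12", 9.0),
    ],
)

# SQL an agent might produce for:
# "What was the month-over-month revenue growth for our top 3 SKUs in Q4?"
QUERY = """
WITH q4 AS (
    SELECT sku, month, revenue
    FROM sales
    WHERE month BETWEEN '2024-10' AND '2024-12'
),
top_skus AS (
    -- Rank SKUs by total Q4 revenue and keep the top 3.
    SELECT sku FROM q4 GROUP BY sku ORDER BY SUM(revenue) DESC LIMIT 3
),
with_prev AS (
    -- LAG fetches the previous month's revenue within each SKU.
    SELECT sku, month, revenue,
           LAG(revenue) OVER (PARTITION BY sku ORDER BY month) AS prev_revenue
    FROM q4
    WHERE sku IN (SELECT sku FROM top_skus)
)
SELECT sku, month,
       ROUND(100.0 * (revenue - prev_revenue) / prev_revenue, 1) AS mom_growth_pct
FROM with_prev
ORDER BY sku, month
"""

rows = conn.execute(QUERY).fetchall()
for row in rows:
    print(row)  # October growth is None: there is no earlier Q4 month to compare with
```

One design choice worth noting: October's growth comes back as `NULL` because the query only looks inside Q4. Using September as a baseline would be an equally defensible reading of the question, and ambiguities like this are part of what makes the task hard. (Window functions such as `LAG` need SQLite 3.25 or later.)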

We then put these models through a “stress test” of such questions, ranging from simple lookups to complex logic puzzles, to see which model wrote the most accurate SQL, how quickly it did so, and at what cost.
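The report does not say exactly how each answer is scored. One common approach for natural-language-to-SQL benchmarks is execution accuracy: run the model's query and a hand-written reference query against the same database and compare the returned rows. The sketch below assumes that approach. The helper names `execution_match` and `accuracy`, the toy `orders` table, and the sample cases are all hypothetical.

```python
import sqlite3
from collections import Counter


def execution_match(conn, generated_sql, reference_sql):
    """True if both queries return the same rows, ignoring row order."""
    try:
        got = conn.execute(generated_sql).fetchall()
    except sqlite3.Error:
        return False  # a query that fails to run counts as a wrong answer
    expected = conn.execute(reference_sql).fetchall()
    return Counter(got) == Counter(expected)


def accuracy(conn, cases):
    """Percentage of (generated_sql, reference_sql) pairs that match."""
    hits = sum(execution_match(conn, gen, ref) for gen, ref in cases)
    return 100.0 * hits / len(cases)


# Hypothetical toy database and test cases.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (sku TEXT, revenue REAL)")
conn.executemany("INSERT INTO orders VALUES (?, ?)", [("A", 10.0), ("B", 20.0)])

cases = [
    # Correct answer.
    ("SELECT SUM(revenue) FROM orders", "SELECT SUM(revenue) FROM orders"),
    # Same rows in a different order: accepted.
    ("SELECT sku FROM orders ORDER BY sku DESC", "SELECT sku FROM orders"),
    # Refers to a table that does not exist, so it fails to run: rejected.
    ("SELECT COUNT(*) FROM order_lines", "SELECT COUNT(*) FROM orders"),
    # Runs, but answers a different question: rejected.
    ("SELECT AVG(revenue) FROM orders", "SELECT SUM(revenue) FROM orders"),
]

print(f"{accuracy(conn, cases):.1f}%")  # 50.0%
```

Comparing rows as a multiset ignores row order, which suits most aggregate questions but would wrongly accept an unsorted answer to a “top N” question. A stricter harness would compare ordered lists for those cases.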

This Pulse report benchmarks leading LLMs to identify the optimal engine for a natural-language-to-SQL agent like the one described above. While cost-effective options exist, our analysis confirms that Google’s Gemini models have effectively separated themselves from the pack. Both Gemini 2.5 Pro and Gemini 3 Pro offer top-tier accuracy (98.8%) at a lower cost than OpenAI’s equally accurate GPT 5.2.

Core Finding: Equal accuracy, different costs

For the kinds of big-data analytics we do, Gemini 3 Pro and GPT 5.2 were equally accurate, but costs diverge sharply across OpenAI’s lineup: GPT 5.2 is only about 13% more expensive per query than Gemini 3 Pro, while GPT 5.2 Pro is more than 10x more expensive.

Near-perfect Accuracy

Gemini 3 Pro achieves a 98.8% average accuracy, matching its predecessor (Gemini 2.5 Pro) and beating the next-strongest competitor, DeepSeek Terminus (Reasoning) at 96.4%, by 2.4 percentage points. Crucially, GPT 5.2 (Reasoning) matched this benchmark exactly, also scoring 98.8%.
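A 2.4-point accuracy gap sounds small, but it is clearer when viewed as error rates. Using only the accuracy figures reported in this benchmark, a short calculation shows that DeepSeek Terminus (Reasoning) gets roughly three times as many queries wrong as Gemini 3 Pro or GPT 5.2. The variable and function names are illustrative.

```python
# Average accuracies (%) reported in this benchmark.
reported_accuracy = {
    "Gemini 3 Pro": 98.8,
    "GPT 5.2 (Reasoning)": 98.8,
    "DeepSeek Terminus (Reasoning)": 96.4,
}


def error_rate(accuracy_pct):
    """Share of queries answered incorrectly, in percent."""
    return 100.0 - accuracy_pct


leader = reported_accuracy["Gemini 3 Pro"]
deepseek = reported_accuracy["DeepSeek Terminus (Reasoning)"]

gap_pp = leader - deepseek                               # percentage points
error_ratio = error_rate(deepseek) / error_rate(leader)  # relative error rate

print(f"Accuracy gap: {gap_pp:.1f} points")
print(f"Error rates: {error_rate(leader):.1f}% vs {error_rate(deepseek):.1f}%")
print(f"DeepSeek is wrong {error_ratio:.1f}x as often")
```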

The OpenAI Comeback

For a while towards the end of last year, it seemed that Google had taken a strong lead in model performance, and OpenAI trailed significantly with GPT 5.1. However, the release of GPT 5.2 erased that deficit. It now matches Gemini 3 Pro’s accuracy step-for-step (98.8%) and has successfully bridged the “attention to detail” gap. The narrative (at least on performance alone) has shifted from “Gemini vs. the rest” to a direct face-off between two equals.

The Speed Question

Historically, a key barrier to adopting high-reasoning models (at least as an embedded feature in self-serve data dashboards) has been latency. The previous market leader, Gemini 2.5 Pro, required an agonizing average of 216 seconds (3 minutes, 36 seconds) to generate a single complex query. Competitors have done better, with DeepSeek Terminus Reasoning taking 189 seconds and GPT 5.1 (Reasoning) clocking in at 139 seconds.

Gemini 3 Pro made headlines by cutting this to 95 seconds, but GPT 5.2 is faster still, averaging 76.5 seconds, or roughly 20% less time than Gemini 3 Pro. Both models have moved “Reasoning” capabilities from experimental features to viable production tools, but OpenAI currently holds the speed crown. Nonetheless, with wait times of over a minute, these models remain unusable for end-user deployment, at least for the use cases we are testing, such as self-serve dashboards.
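A speed comparison can be expressed either as a reduction in wait time or as a speed-up ratio, and the two give different numbers. The sketch below computes both from the average latencies reported above; the helper names are illustrative.

```python
# Average seconds per complex query, as reported above.
latency_s = {
    "Gemini 2.5 Pro": 216.0,
    "DeepSeek Terminus (Reasoning)": 189.0,
    "GPT 5.1 (Reasoning)": 139.0,
    "Gemini 3 Pro": 95.0,
    "GPT 5.2 (Reasoning)": 76.5,
}


def wait_reduction_pct(new_s, old_s):
    """How much shorter the new wait is, as a percentage of the old wait."""
    return 100.0 * (old_s - new_s) / old_s


def speedup(new_s, old_s):
    """How many times more queries the new model completes when run back to back."""
    return old_s / new_s


gpt52, gemini3 = latency_s["GPT 5.2 (Reasoning)"], latency_s["Gemini 3 Pro"]
print(f"GPT 5.2 vs Gemini 3 Pro: {wait_reduction_pct(gpt52, gemini3):.1f}% less wait, "
      f"{speedup(gpt52, gemini3):.2f}x speed-up")

gemini25 = latency_s["Gemini 2.5 Pro"]
print(f"Gemini 3 Pro vs 2.5 Pro: {wait_reduction_pct(gemini3, gemini25):.1f}% less wait")
```

GPT 5.2 waits about 19.5% less than Gemini 3 Pro, which is the same thing as completing about 1.24x as many sequential queries in a given time.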

The Competitive Landscape & Trade-offs to Consider

Trade-off 1: Cost

High intelligence comes at a premium. Gemini 3 Pro costs $0.061 per query, while GPT 5.2 is slightly more expensive at $0.069 per query. Although both are significantly pricier than DeepSeek (approximately $0.005 per query), they are the only viable options for mission-critical accuracy.
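Per-query figures are easier to weigh at volume. The sketch below multiplies the reported per-query costs by an assumed 10,000 queries per month. That volume is purely hypothetical, and the DeepSeek figure is the approximate one quoted above.

```python
# Reported average cost per query in US$ (the DeepSeek figure is approximate).
cost_per_query = {"Gemini 3 Pro": 0.061, "GPT 5.2": 0.069, "DeepSeek": 0.005}

QUERIES_PER_MONTH = 10_000  # hypothetical volume, for illustration only


def monthly_cost(model, queries=QUERIES_PER_MONTH):
    """Projected monthly spend for one model at a fixed query volume."""
    return cost_per_query[model] * queries


for model in cost_per_query:
    print(f"{model}: ${monthly_cost(model):,.2f}")

ratio = cost_per_query["Gemini 3 Pro"] / cost_per_query["DeepSeek"]
print(f"Gemini 3 Pro costs {ratio:.1f}x as much as DeepSeek per query")
```

At that volume the gap between the two top-tier models is about $80 a month, while DeepSeek is roughly 12x cheaper than Gemini 3 Pro.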

Trade-off 2: The “Reasoning” Latency Tax

Despite the speed improvements in Gemini 3 Pro and GPT 5.2, “Reasoning” models impose an inherent latency tax compared to standard models. Standard models like DeepSeek Terminus execute in roughly 33 seconds, whereas users must still wait well over a minute for Gemini 3 Pro (95 seconds) and GPT 5.2 (76.5 seconds). For many applications, this wait may be necessary: in our experience, enabling reasoning is the only way to maintain >96% accuracy on “Hard” complexity questions. Standard models drop to approximately 86% accuracy on these tasks, a level of risk that is unacceptable for reliable reporting in our use cases.
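To put the hard-question accuracy figures in concrete terms, the sketch below estimates how many wrong answers to expect in a hypothetical batch of 100 “Hard” questions. It takes 96% as a floor for reasoning models (the reported figure is above 96%) and 86% for standard models.

```python
def expected_wrong(accuracy_pct, n_questions):
    """Expected number of incorrect answers in a batch of questions."""
    return n_questions * (100.0 - accuracy_pct) / 100.0


BATCH = 100  # hypothetical batch of "Hard" questions

reasoning = expected_wrong(96.0, BATCH)  # >96% reported; 96% used as a floor
standard = expected_wrong(86.0, BATCH)   # ~86% reported

print(f"Reasoning: ~{reasoning:.0f} wrong, standard: ~{standard:.0f} wrong")
# Because 96% is a floor, the true ratio is at least this large.
print(f"Standard models make at least {standard / reasoning:.1f}x as many errors")
```

That is about 4 versus 14 wrong reports per 100 questions, so switching reasoning off at least 3.5x the error count.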

Trade-off 3: DeepSeek as the “Almost” Contender

DeepSeek V3.1 Terminus (Reasoning) is an impressive technical feat, achieving a respectable 96.4% accuracy. Its main weakness is speed: at 189 seconds, it is remarkably slow. It also lacks the fine-grained edge-case handling found in Gemini. Consequently, DeepSeek serves as a “good enough” option under strict budget constraints, whereas GPT 5.2 and Gemini 3 Pro remain the “best” options when performance is the priority.

Trade-off 4: Speed vs. Efficiency

The choice at the top tier is no longer about capability, but about optimization. Gemini 3 Pro remains the most cost-efficient of the elite models ($0.061 per query), saving ~12% on every query compared to GPT 5.2, provided you can tolerate a slightly longer wait (95s). GPT 5.2, by contrast, is the speed king: if your user experience demands the fastest possible “Reasoning” response (76.5s), the extra cost is a necessary premium.
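The same $0.008 price difference produces two different percentages depending on which model is the baseline, and it can also be read as a price per second of waiting saved. A quick check using only the cost and latency figures reported above (variable names are illustrative):

```python
# Reported per-query cost (US$) and average latency (s).
gemini_cost, gpt_cost = 0.061, 0.069
gemini_s, gpt_s = 95.0, 76.5

diff = gpt_cost - gemini_cost           # US$ extra per query for GPT 5.2
premium_pct = 100 * diff / gemini_cost  # baseline: Gemini 3 Pro
saving_pct = 100 * diff / gpt_cost      # baseline: GPT 5.2
seconds_saved = gemini_s - gpt_s        # shorter wait per query with GPT 5.2
usd_per_second_per_1k = 1000 * diff / seconds_saved

print(f"GPT 5.2 premium over Gemini 3 Pro: {premium_pct:.1f}%")
print(f"Gemini 3 Pro saving vs GPT 5.2:    {saving_pct:.1f}%")
print(f"Per 1,000 queries: ${1000 * diff:.2f} extra buys {seconds_saved:.1f}s less wait "
      f"per query (${usd_per_second_per_1k:.2f} per second saved)")
```

In other words, GPT 5.2 is about 13% more expensive than Gemini 3 Pro, Gemini 3 Pro is about 12% cheaper than GPT 5.2, and every 1,000 queries cost about $8 more in exchange for an 18.5-second shorter wait on each.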

Conclusion & Recommendation

The data indicates a clear hierarchy in LLMs for building data analytics agents. Gemini 3 Pro and GPT 5.2 stand alone as the overall winners, being the only models to combine peak accuracy (98.8%) with relatively low cost (under US$0.07 per query) and tolerable latency (under 100s).
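The hierarchy can be expressed as a simple filter over the reported results. This is a sketch: the `ModelResult` type and `shortlist` function are hypothetical, and the thresholds mirror the ones stated above. Costs are left as `None` where the report gives no model-specific figure (the ~$0.005 DeepSeek price is not clearly tied to the Terminus Reasoning variant), and models with unknown cost are excluded rather than assumed cheap.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class ModelResult:
    name: str
    accuracy_pct: float
    latency_s: float
    cost_usd: Optional[float]  # None where no per-model figure is reported


RESULTS = [
    ModelResult("Gemini 3 Pro", 98.8, 95.0, 0.061),
    ModelResult("GPT 5.2 (Reasoning)", 98.8, 76.5, 0.069),
    ModelResult("Gemini 2.5 Pro", 98.8, 216.0, None),
    ModelResult("DeepSeek V3.1 Terminus (Reasoning)", 96.4, 189.0, None),
]


def shortlist(results: List[ModelResult], min_acc: float = 98.8,
              max_cost: float = 0.07, max_latency: float = 100.0) -> List[str]:
    """Names of models meeting all three thresholds; unknown cost fails."""
    return [
        r.name for r in results
        if r.accuracy_pct >= min_acc
        and r.cost_usd is not None and r.cost_usd < max_cost
        and r.latency_s < max_latency
    ]


print(shortlist(RESULTS))  # ['Gemini 3 Pro', 'GPT 5.2 (Reasoning)']
```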

Final Verdict:

For a production-ready agent where data integrity is paramount, the field has narrowed to two choices. GPT 5.2 takes the crown for raw speed, while Gemini 3 Pro retains the edge in cost efficiency. Both have rendered previous leaders obsolete.